The Iterative Cognitive Cycle

Engineering Self-Refining AI for Code and Capital

"The Loop Wins."

Executive Summary

The AI paradigm has shifted from "one-shot" generation to recursive reasoning. This whitepaper dissects the engineering patterns—Reflexion, Chain of Density, and Tree of Thoughts—that transform Large Language Models from stochastic text generators into closed-loop reasoning engines.

With December 2025's frontier models (Gemini 3.0 Deep Think, GPT-5.2 Thinking, Claude Opus 4.5) all embedding "thinking" as a core feature, the architecture of how you prompt matters as much as which model you use.

The Shift: From System 1 to System 2

For a decade, AI operated in "System 1" mode: fast, intuitive, and dangerously confident. You provide a prompt, the model emits tokens left to right without revisiting any of them, and whatever comes out is the final answer. This "one-shot" decoding approach was computationally cheap but cognitively brittle.

The fatal flaw was Error Cascading. In autoregressive generation, a logical error in step 3 becomes immutable truth for steps 4, 5, and 6. The model cannot backtrack. It cannot reconsider.

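We have now entered the era of "System 2" architectures: slow, deliberative, and self-critical. The key insight is that a model is generally better at judging whether an answer is correct than at producing a correct answer on the first try.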

This "Discriminator-Generator Asymmetry" is the theoretical foundation for all self-refining systems. By December 2025, every major AI lab has embedded this principle into their flagship models: Google's Deep Think, OpenAI's Thinking variants, and Anthropic's extended thinking all trade inference-time compute for intelligence.

The State of the Art: December 2025

The past 60 days have redefined what's possible

Three flagship releases have converged on the same architectural insight: thinking as a controllable feature.

| Model | Release Date | SWE-bench Verified | Defining Strength |
|---|---|---|---|
| Gemini 3.0 Pro Deep Think | Dec 4, 2025 | 76.2% | Parallel reasoning, 45.1% on ARC-AGI-2 |
| GPT-5.2 Thinking | Dec 11, 2025 | 80.0% | SWE-bench Pro at 55.6% |
| Claude Opus 4.5 | Nov 24, 2025 | 80.9% | Token efficiency, multi-language dominance |

What These Benchmarks Mean

SWE-bench Verified tests whether a model can autonomously fix real GitHub issues: reading code, understanding context, and generating patches that pass the project's tests. A score of 80% means the model resolved 4 out of 5 issues in the benchmark's human-validated task set without human intervention.

ARC-AGI-2 measures abstract reasoning on novel puzzles the model has never seen. Gemini 3.0 Deep Think's 45.1% score (with code execution) surpassed GPT-5.1 (17.6%) and Claude Opus 4.5 (37.6%) under identical conditions—a testament to parallel hypothesis exploration.

How It Works: The Feedback Gradient

The engine of any iterative system is the feedback signal—the information that tells the model how to improve.

| Feedback Type | Source | Example | Reliability |
|---|---|---|---|
| Intrinsic (Verbal) | Self-Critique | "The tone is too informal" | Low (subjective) |
| Extrinsic (Deterministic) | Tool Output | SyntaxError: line 42 | High (objective) |
| Extrinsic (Simulation) | Environment | Backtest Sharpe: 0.3 | Very High (empirical) |

The transition from intrinsic to extrinsic feedback marks the leap from "prompt engineering" to Agentic Workflows. Internal feedback improves style; external feedback grounds the AI in reality.
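As a minimal sketch of the deterministic row in the table above, the snippet below uses Python's built-in `compile` to turn a syntax error into a short message that could be appended to the next prompt. The function name `syntax_feedback` and the message format are illustrative assumptions, not part of any framework.

```python
from typing import Optional


def syntax_feedback(source: str) -> Optional[str]:
    """Return None if `source` parses as Python, else a short error message."""
    try:
        # compile() only parses the code; nothing is executed.
        compile(source, "<candidate>", "exec")
    except SyntaxError as err:
        return f"SyntaxError: {err.msg} (line {err.lineno})"
    return None


good = "def add(a, b):\n    return a + b\n"
bad = "def add(a, b)\n    return a + b\n"  # missing colon on line 1

print(syntax_feedback(good))
print(syntax_feedback(bad))
```

Because the signal comes from the parser rather than from the model's own opinion, it is the same on every run, which is what makes it usable as a stopping condition in a loop.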

Frameworks for Immediate Application

1. Chain of Density (CoD): Maximizing Information Entropy

The Problem: AI summaries are verbose and low-density.

The Mechanism: An iterative loop, typically run for five rounds, in which the model:

- Identifies Missing Entities (specific names, figures, dates) from the source

- Rewrites the summary to fuse these entities in, without increasing word count

- Repeats until maximum information density is achieved

The Result: Instead of "The CEO discussed APAC headwinds," you get "CEO Smith cited 15% YoY revenue contraction in APAC due to FX headwinds, contrasting with 5% EMEA growth."
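The loop above might be sketched as follows. This is an illustration under stated assumptions: `generate` stands for any function that sends a prompt to a model and returns text, and the prompt wording, the `rounds` default, and the `max_words` limit are hypothetical choices rather than a published template.

```python
from typing import Callable

# Hypothetical prompt template; tune the wording for your model.
COD_PROMPT = (
    "Source:\n{source}\n\nCurrent summary:\n{summary}\n\n"
    "Identify 1-3 informative entities from the source that are missing "
    "from the summary, then rewrite the summary to include them "
    "without exceeding {max_words} words. Return only the new summary."
)


def chain_of_density(source: str, generate: Callable[[str], str],
                     rounds: int = 5, max_words: int = 80) -> list[str]:
    """Run `rounds` densification passes and return every intermediate summary."""
    summary = generate(f"Summarize in at most {max_words} words:\n{source}")
    history = [summary]
    for _ in range(rounds):
        prompt = COD_PROMPT.format(source=source, summary=summary,
                                   max_words=max_words)
        summary = generate(prompt)
        history.append(summary)
    return history
```

Returning the full history rather than only the last summary lets a reviewer pick the round where density and readability balance best.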

2. System 2 Attention (S2A): Filtering Noise and Bias

The Problem: "Sycophancy"—the model agrees with user bias.

The Mechanism: A pre-processing filter separates what the model looks at from what the model thinks about:

- Input: User query mixed with opinion

- S2A Filter: Model rewrites to extract only objective, verifiable claims

- Reasoning: Model answers using only sanitized context

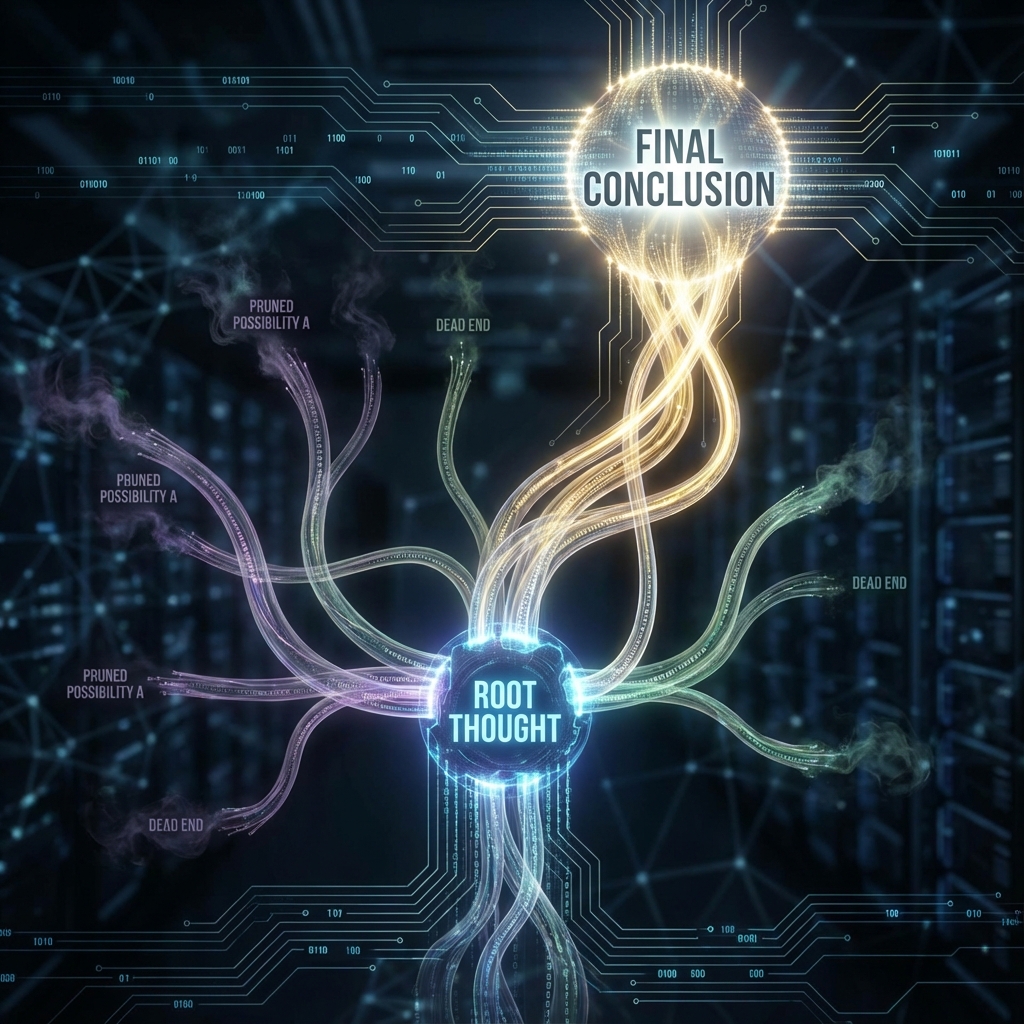
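The three stages listed above can be sketched as a two-call pipeline. The `generate` callable and both prompt strings are assumptions made for illustration; the point is only that the answering call receives the rewritten context and never the raw query.

```python
from typing import Callable

# Hypothetical filter prompt; the wording is not taken from any library.
FILTER_PROMPT = (
    "Rewrite the text below so it keeps only objective, verifiable "
    "claims and the question being asked. Remove opinions and leading "
    "statements.\n\nText:\n{query}"
)


def s2a_answer(query: str, generate: Callable[[str], str]) -> tuple[str, str]:
    """Return (sanitized_context, answer); the answer never sees the raw query."""
    sanitized = generate(FILTER_PROMPT.format(query=query))
    answer = generate(f"Answer using only this context:\n{sanitized}")
    return sanitized, answer
```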

3. Tree of Thoughts (ToT): Exploratory Branching

The Problem: Linear "Chain of Thought" commits to early mistakes.

The Mechanism: A search algorithm applied to reasoning:

- Decompose the problem into discrete steps

- Generate multiple candidate solutions for each step

- Evaluate each candidate (score 1-10)

- Prune low-scoring branches and proceed with winners
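One common way to realize these four steps is a beam search over partial solutions. The sketch below assumes two caller-supplied functions, `propose` and `score`, which in practice would each wrap a model call; here they are replaced by a toy problem so the code runs on its own. The names and defaults are illustrative.

```python
from typing import Callable


def tree_of_thoughts(
    root: str,
    propose: Callable[[str], list[str]],  # partial solution -> candidate next states
    score: Callable[[str], float],        # state -> rating, e.g. 1-10
    depth: int = 3,
    beam_width: int = 2,
) -> str:
    """Breadth-first search that keeps the top `beam_width` states per step."""
    frontier = [root]
    for _ in range(depth):
        candidates = [nxt for state in frontier for nxt in propose(state)]
        if not candidates:
            break
        # Prune: only the highest-scoring branches survive to the next step.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)


# Toy stand-ins: build a digit string whose digit sum is as large as possible.
def toy_propose(state: str) -> list[str]:
    return [state + d for d in "123"]


def toy_score(state: str) -> float:
    return float(sum(int(ch) for ch in state))


print(tree_of_thoughts("", toy_propose, toy_score, depth=3))  # prints 333
```

A wider beam explores more alternatives at a proportional cost in model calls, so `beam_width` is the main knob for trading compute against robustness to early mistakes.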

Agentic Architectures in Software Engineering

Reflexion: Verbal Reinforcement Learning

Reflexion replaces gradient updates with linguistic memory—the agent "learns" by recording what went wrong in natural language.

The Architecture:

- Actor: Generates code

- Evaluator: Executes tests (pytest/jest)

- Self-Reflection Model: Reads error log, generates "verbal lesson"

- Memory: Appends lesson to episodic memory

- Loop: Actor retries, conditioned on the verbal lesson
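The five components above can be wired together in a few lines. In this sketch the actor, evaluator, and reflection model are passed in as plain callables (all hypothetical signatures); a real evaluator would run pytest or jest in a sandbox and return the captured error log.

```python
from typing import Callable, Optional


def reflexion_loop(
    task: str,
    actor: Callable[[str, list[str]], str],     # (task, lessons) -> code
    evaluator: Callable[[str], Optional[str]],  # code -> error log, or None on pass
    reflect: Callable[[str, str], str],         # (code, error log) -> verbal lesson
    max_trials: int = 3,
) -> tuple[str, list[str]]:
    """Retry until the evaluator passes, accumulating lessons in episodic memory."""
    memory: list[str] = []
    code = ""
    for _ in range(max_trials):
        code = actor(task, memory)
        error_log = evaluator(code)
        if error_log is None:
            break  # tests passed; no lesson needed
        memory.append(reflect(code, error_log))
    return code, memory
```

Note that no model weights change between trials: the only thing that carries over is the list of lessons, which is why the technique works with API-only models.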

Self-Improving Coding Agents (SICA): Recent research shows that agents which autonomously edit their own code can raise their score on a SWE-bench Verified subset from 17% to 53% by discovering new prompting schemes and tools without human intervention.

Agentic Architectures in Algorithmic Trading

Automated Alpha Mining: The Chain-of-Alpha Framework

Phase 1: Hypothesis Generation

The LLM scans academic papers to propose a natural language hypothesis:

"High institutional ownership + decreasing volatility often precedes breakout"

Phase 2: Translation

    alpha = Rank(inst_ownership) + Rank(1 / StdDev(close, 20))

Phase 3: Evaluation

The code runs against 10 years of historical data. Metrics: Information Coefficient, Sharpe Ratio, Maximum Drawdown.
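For reference, the three metrics can be computed with the standard library alone. The sketch below makes several assumptions that are not stated in the text: a zero risk-free rate, 252 trading periods per year, simple per-period returns, and the rank (Spearman) form of the Information Coefficient without tie handling.

```python
import math
from statistics import mean, stdev


def sharpe_ratio(returns: list[float], periods_per_year: int = 252) -> float:
    """Annualized Sharpe ratio, assuming a zero risk-free rate."""
    return mean(returns) / stdev(returns) * math.sqrt(periods_per_year)


def max_drawdown(returns: list[float]) -> float:
    """Largest peak-to-trough loss of the compounded equity curve, as a fraction."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = max(worst, 1.0 - equity / peak)
    return worst


def information_coefficient(signal: list[float],
                            forward_returns: list[float]) -> float:
    """Spearman rank correlation between signal and next-period returns."""
    def ranks(xs: list[float]) -> list[float]:
        order = sorted(range(len(xs)), key=xs.__getitem__)
        out = [0.0] * len(xs)
        for rank, idx in enumerate(order):
            out[idx] = float(rank)
        return out

    rs, rr = ranks(signal), ranks(forward_returns)
    ms, mr = mean(rs), mean(rr)
    cov = sum((a - ms) * (b - mr) for a, b in zip(rs, rr))
    var_s = sum((a - ms) ** 2 for a in rs)
    var_r = sum((b - mr) ** 2 for b in rr)
    return cov / math.sqrt(var_s * var_r)
```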

Phase 4: Iterative Refinement

- "IC is 0.01—too noisy" → "Try smoothing with SMA(5)"

- "Sharpe good, but 40% drawdown" → "Add volatility filter: exit when VIX > 30"
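The refinement step depends on turning backtest numbers into text the model can act on. A minimal sketch of that translation is shown below; the function name and every threshold are hypothetical placeholders chosen for illustration, not recommended values.

```python
def backtest_feedback(ic: float, sharpe: float, max_drawdown: float,
                      min_ic: float = 0.02, min_sharpe: float = 1.0,
                      max_dd_allowed: float = 0.25) -> list[str]:
    """Translate backtest metrics into critique lines for the next prompt."""
    notes = []
    if abs(ic) < min_ic:
        notes.append(f"IC is {ic:.2f}: signal is too noisy; consider smoothing.")
    if sharpe < min_sharpe:
        notes.append(f"Sharpe is {sharpe:.2f}: risk-adjusted return is too low.")
    if max_drawdown > max_dd_allowed:
        notes.append(f"Max drawdown is {max_drawdown:.0%}: add a risk filter.")
    return notes


for line in backtest_feedback(ic=0.01, sharpe=1.5, max_drawdown=0.40):
    print(line)
```

An empty list means every check passed, which gives the loop a natural stopping condition.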

FinMem: Memory-Augmented Trading

| Memory Layer | Function | Example |
|---|---|---|
| Working Memory | Real-time stream | Current tick, live headlines |
| Episodic Memory | Long-term events (RAG) | "What happened in 2008?" |
| Procedural Memory | Evolved heuristics | "Stop trading if VIX > 40" |
| Profiling | Agent persona | "Conservative pension fund" |
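One way to picture the four layers is as a single structure that assembles the prompt context before each decision. The class below is a hypothetical sketch, not FinMem's actual implementation: the class name, field names, and the keyword-overlap `recall` method (a stand-in for real retrieval) are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class LayeredMemory:
    profile: str                                         # agent persona
    working: list[str] = field(default_factory=list)     # live ticks and headlines
    episodic: list[str] = field(default_factory=list)    # long-term event notes
    procedural: list[str] = field(default_factory=list)  # evolved rules

    def recall(self, query: str, k: int = 2) -> list[str]:
        """Naive keyword-overlap retrieval over episodic memory."""
        words = set(query.lower().split())
        scored = [(len(words & set(e.lower().split())), e) for e in self.episodic]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [event for overlap, event in ranked if overlap > 0][:k]

    def build_context(self, query: str) -> str:
        """Combine all four layers into one prompt prefix."""
        parts = [f"Persona: {self.profile}"]
        parts += [f"Rule: {rule}" for rule in self.procedural]
        parts += [f"Past event: {event}" for event in self.recall(query)]
        parts += [f"Now: {item}" for item in self.working[-3:]]
        return "\n".join(parts)
```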

Implementation Guide: Three Levels

Level 1: The Manual Critic (No Code)

Execute this pattern in any chat interface:

Prompt 1: "Write a Python script to [task]"

Prompt 2: "Act as a Senior Security Engineer. Review for vulnerabilities, efficiency issues, and PEP8 compliance. List specific flaws."

Prompt 3: "Rewrite incorporating all fixes from your critique."

Level 2: The Automated Loop (Python + API)

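    # `llm` is a placeholder for any client exposing generate(messages) -> str.
    def iterative_refinement(llm, task: str, max_iterations: int = 3) -> str:
        messages = [{"role": "user", "content": task}]
        response = ""
        for _ in range(max_iterations):
            response = llm.generate(messages)
            messages.append({"role": "assistant", "content": response})
            # Critique in a fresh context and demand the verdict as the first word.
            critique = llm.generate([{
                "role": "user",
                "content": "Is this complete and correct? Start with YES or NO. "
                           f"If NO, list issues:\n{response}",
            }])
            if critique.strip().upper().startswith("YES"):
                return response
            messages.append({"role": "user", "content": f"Address these issues: {critique}"})
        # Budget exhausted: return the latest draft, not the last critique request.
        return response

Level 3: Evolutionary Optimization (AlphaEvolve)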

Use the LLM as a mutation operator in a genetic algorithm:

- Population: 50 code variants

- Fitness Function: Execution time or test coverage

- Mutation: LLM generates code "diffs" that might improve performance

- Selection: Best variants survive to the next generation
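The four ingredients above fit into a short elitist loop. In the sketch below, `mutate` is where an LLM call proposing a code diff would go; it is replaced here by a random character edit on a toy string so the example runs without a model. All names, sizes, and the toy fitness function are illustrative assumptions.

```python
import random
from typing import Callable


def evolve(
    seed: str,
    mutate: Callable[[str, random.Random], str],  # stand-in for an LLM-proposed diff
    fitness: Callable[[str], float],              # higher is better
    population_size: int = 50,
    survivors: int = 10,
    generations: int = 20,
    rng_seed: int = 0,
) -> str:
    rng = random.Random(rng_seed)
    population = [seed] * population_size
    for _ in range(generations):
        # Selection: keep the fittest variants unchanged (elitism).
        elite = sorted(population, key=fitness, reverse=True)[:survivors]
        # Mutation: refill the population with edited copies of survivors.
        children = [mutate(rng.choice(elite), rng)
                    for _ in range(population_size - survivors)]
        population = elite + children
    return max(population, key=fitness)


# Toy problem: evolve a string toward a fixed target.
TARGET = "loop"


def toy_fitness(candidate: str) -> float:
    return float(sum(a == b for a, b in zip(candidate, TARGET)))


def toy_mutate(candidate: str, rng: random.Random) -> str:
    i = rng.randrange(len(candidate))
    return candidate[:i] + rng.choice("lopx") + candidate[i + 1:]


best = evolve("xxxx", toy_mutate, toy_fitness,
              population_size=20, survivors=5, generations=30)
print(best, toy_fitness(best))
```

Because the elite are carried over unchanged, the best fitness in the population never decreases from one generation to the next; with a real fitness function such as execution time, each evaluation should run in a sandbox.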

Conclusion: The Loop is Now Native

The December 2025 model releases mark an inflection point. Thinking modes are no longer exotic—they're baseline features. Gemini 3.0 Deep Think, GPT-5.2 Thinking, and Claude Opus 4.5 all ship with built-in iterative reasoning.

This changes the game:

1. The architecture gap is closing. When every model can "think," your competitive advantage shifts from raw model capability to workflow design—how you structure the loop, what feedback signals you provide, and how you ground hallucinations.

2. External validators are mandatory. The models that win on SWE-bench (80%+ accuracy) succeed because their patches are checked against real test suites that either pass or fail. Your systems need the same rigor.

3. Specialization is emerging. The "Great Bifurcation" means no single model dominates all tasks. Gemini excels at abstract reasoning, Claude at multi-language code, GPT at broad task coverage. Orchestration beats optimization.

The Loop Wins.