The Evolutionary Operator

When Code Rewrites Itself to Find Alpha

"The question is no longer 'How do I hire more quants?' It is 'How do I build the factory that builds the quants?'"

Executive Summary

The era of the human coding bottleneck is over. Autonomous agents—powered by Large Language Models (LLMs)—are now capable of executing the entire quantitative research lifecycle. They don't just optimize parameters; they write, test, and rewrite the strategy logic itself. This shift from Quantitative Analysis to Quantitative Synthesis is the most significant competitive inflection point in algorithmic trading since the adoption of high-frequency execution.

1. The Bottleneck is You

Imagine a junior quantitative researcher who never sleeps. They don't just backtest ideas you give them—they generate their own. They read the documentation for your backtesting engine, write the Python script, run the simulation, analyze the loss, realize why it failed, and then rewrite the code to fix the logic. They do this 24 hours a day, iterating through thousands of structural variations before you’ve had your morning coffee.

Now imagine you have a thousand of them.

For the last two decades, the "alpha mining" workflow has remained stubbornly human-centric. You have an idea. You write code. You wait. You see a chart. You tweak a parameter. You wait again. The bottleneck isn't data; it isn't compute; it is human cognitive bandwidth and the manual labor of typing code.

We are witnessing a convergence that renders this workflow obsolete. Generative AI has moved beyond "copilots" that help you autocomplete a function. We have entered the era of Autonomous Alpha Mining—systems where the AI acts as the researcher, the engineer, and the critic in a closed, recursive loop.

The question is no longer "How do I hire more quants?" It is "How do I build the factory that builds the quants?"

2. The Paradigm Shift: From Parameter Sweeping to Structure Learning

To understand the magnitude of this shift, we must look at what "optimization" has meant until today.

In the traditional paradigm, logic is fixed. You decide to use a Moving Average Crossover. You then use an optimizer (perhaps a Genetic Algorithm) to find the best window lengths (e.g., 50 vs 200). You are essentially tuning the knobs on a machine you built.

In the Agentic paradigm, logic is fluid. Topics like "structure learning" are no longer abstract academic concepts. The agent doesn't just turn the knobs; it rebuilds the machine.
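To make the contrast concrete, here is a minimal sketch of the traditional paradigm in plain Python. The rule (a long-only moving-average crossover) is fixed, and the search only moves the two window lengths. The synthetic prices and the helper names `sma`, `crossover_pnl`, and `sweep` are illustrative assumptions, not part of any particular library.

```python
import random
from itertools import product

def sma(prices, window):
    """Simple moving average; None until enough history exists."""
    out = []
    running = 0.0
    for i, p in enumerate(prices):
        running += p
        if i >= window:
            running -= prices[i - window]
        out.append(running / window if i >= window - 1 else None)
    return out

def crossover_pnl(prices, fast, slow):
    """Total P&L of holding one unit while the fast SMA is above the slow SMA."""
    f, s = sma(prices, fast), sma(prices, slow)
    pnl = 0.0
    for t in range(1, len(prices)):
        # Use yesterday's signal to trade today's move (avoids look-ahead).
        if f[t - 1] is not None and s[t - 1] is not None and f[t - 1] > s[t - 1]:
            pnl += prices[t] - prices[t - 1]
    return pnl

def sweep(prices, fast_grid, slow_grid):
    """Parameter sweep: the logic is fixed, only the knobs move."""
    candidates = [(f, s) for f, s in product(fast_grid, slow_grid) if f < s]
    return max(candidates, key=lambda fs: crossover_pnl(prices, *fs))

# Synthetic random-walk prices, for illustration only.
rng = random.Random(0)
prices = [100.0]
for _ in range(500):
    prices.append(prices[-1] + rng.gauss(0.05, 1.0))

best_fast, best_slow = sweep(prices, [5, 10, 20], [50, 100, 200])
```

Everything `sweep` can ever return is a crossover with different numbers. An agentic system would instead search over the body of `crossover_pnl` itself.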

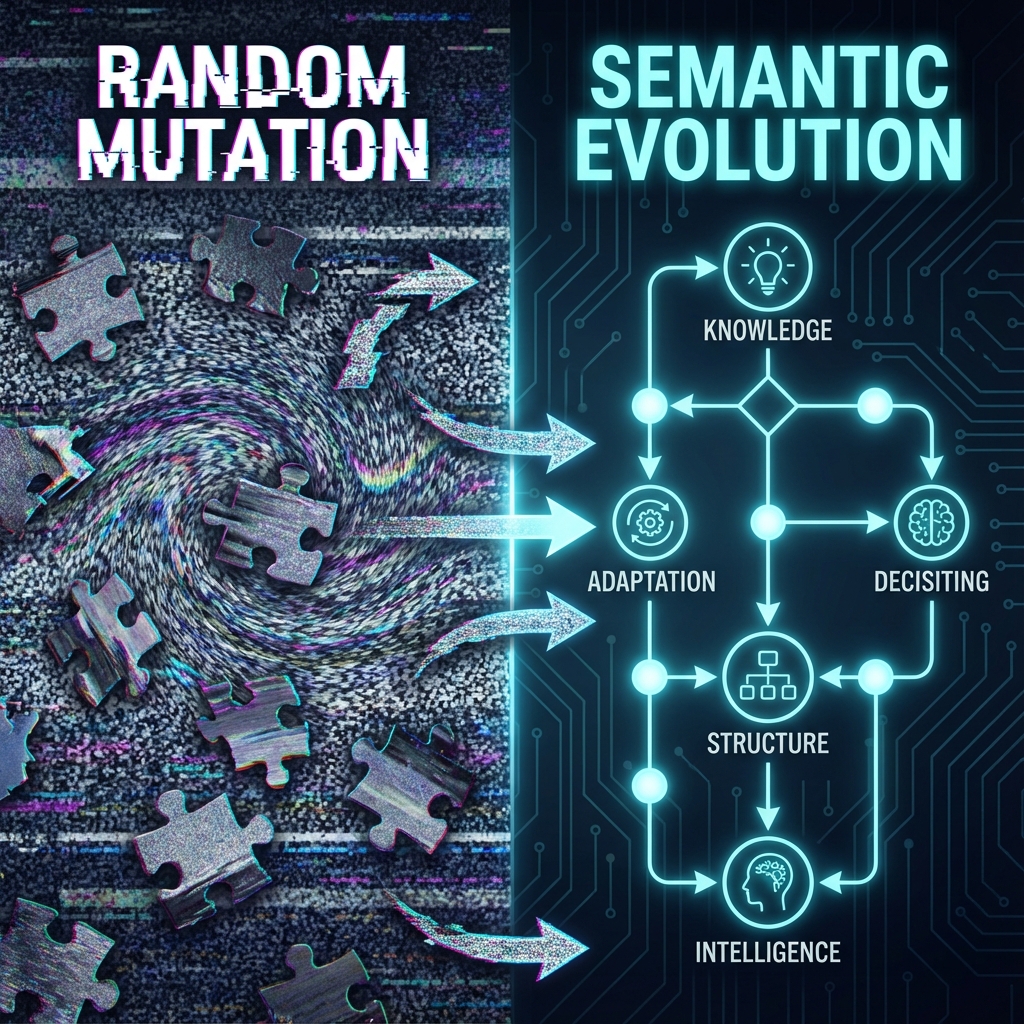

The LLM as an Evolutionary Operator

This is the core breakthrough. Traditional Genetic Programming (GP) tried to evolve code by randomly flipping bits or swapping text. It was blind. Changing a `+` to a `-` was a guess.

Large Language Models (LLMs) introduce semantic mutation. The agent understands intent.

- Traditional GP: Randomly changes `window_size` to `window_size + 1`. Hope it works.
- Agentic Evolution: Reads the backtest logs. Sees that the strategy failed during the 2020 volatility spike. Reason: "I need to filter for high volatility regimes." Action: Intentionally injects a standard deviation filter into the code.

The LLM effectively acts as a "Thinking Mutation" operator. It prunes the search space of infinite possibilities down to the search space of legitimate financial logic. It doesn't guess; it designs.
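The difference between the two operators can be sketched in a few lines of Python. This is an illustration under stated assumptions: `blind_mutation` and `semantic_mutation` are hypothetical names, the prompt wording is invented, and `stub_llm` is a canned stand-in for a real model call so the example runs offline.

```python
import random
from typing import Callable

def blind_mutation(source: str, rng: random.Random) -> str:
    """GP-style mutation: swap one arithmetic operator at random, with no idea why."""
    positions = [i for i, ch in enumerate(source) if ch in "+-"]
    if not positions:
        return source
    i = rng.choice(positions)
    flipped = "-" if source[i] == "+" else "+"
    return source[:i] + flipped + source[i + 1:]

def semantic_mutation(source: str, backtest_log: str, llm: Callable[[str], str]) -> str:
    """LLM-style mutation: the edit is conditioned on evidence from the last backtest."""
    prompt = (
        "You are revising a trading strategy.\n"
        f"Current code:\n{source}\n"
        f"Backtest log:\n{backtest_log}\n"
        "Diagnose the failure, then return the full revised code only."
    )
    return llm(prompt)

def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned revision."""
    return (
        "def signal(fast, slow, vol, vol_cap):\n"
        "    # Stay flat in high-volatility regimes.\n"
        "    if vol > vol_cap:\n"
        "        return 0\n"
        "    return 1 if fast - slow > 0 else 0\n"
    )

parent = "def signal(fast, slow):\n    return 1 if fast - slow > 0 else 0\n"
log = "2020-03: drawdown -31% while realized volatility tripled"  # made-up log line

child_blind = blind_mutation(parent, random.Random(1))
child_semantic = semantic_mutation(parent, log, stub_llm)
```

The blind child turns `fast - slow` into `fast + slow`, which is syntactically valid and financially meaningless. The semantic child changes the function's structure in response to the log, which is the behavior the "Thinking Mutation" label describes.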

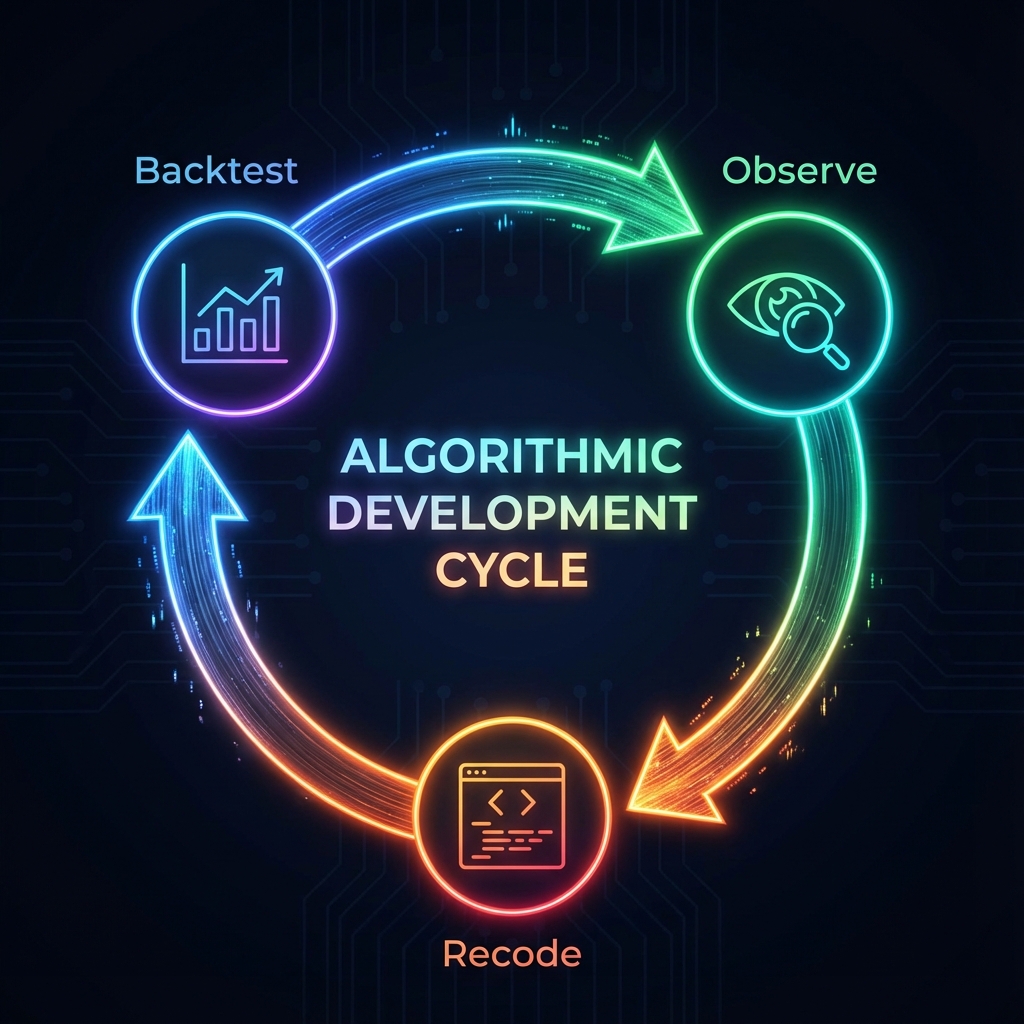

3. How It Actually Works: The Recursive Loop

The magic happens in the Backtest-Observe-Recode loop. This is not a linear process; it is a cybernetic feedback loop reminiscent of how human developers work, but accelerated.

Let’s walk through a single cycle of an autonomous agent:

1. Ideation (The Spark)

The cycle begins with a hypothesis. This can come from a human prompt ("Make a mean reversion strategy for crypto") or be autonomously retrieved from a vector database of financial literature. The Agent synthesizes this into a plan.

2. Effectuation (The Code)

The Agent generates executable Python code. It knows the syntax of your backtesting engine (e.g., Backtesting.py, VectorBT). It handles imports, class structures, and data feeds. It doesn't just output text; it outputs a software product.

3. Validation (The Crucible)

The code is executed in a sandboxed environment. This is the reality check.

- Did it crash? (Syntax or Runtime Error) -> The error log is captured.
- Did it lose money? (Financial Error) -> The performance metrics (Sharpe, Drawdown) are captured.

4. Observation (The Analysis)

The Agent reads the results. It doesn't just look at the final PnL number. It analyzes the behavior. "Why did I lose 15% in March?" It looks at the trade logs. "I was long during an earnings announcement."

5. Recoding (The Evolution)

This is the critical step. The Agent engages in Chain-of-Thought (CoT) reasoning.

- Diagnosis: "The strategy is over-exposed to event risk."
- Hypothesis: "Adding an earnings calendar filter will reduce drawdown."
- Implementation: The Agent rewrites the source code to explicitly check the calendar before entering a trade.

The loop restarts. The new code is validated. The strategy has evolved.
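The five steps above can be sketched as a small driver loop. This is a minimal illustration, not a reference implementation: `run_candidate` and `evolve` are hypothetical names, the "last stdout line is a JSON metrics object" contract is an assumption made for the example, and the model is replaced by a scripted callable that replays canned revisions. A plain child process is used only to keep the sketch runnable; it is not real isolation.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable

def run_candidate(source: str, timeout_s: int = 30) -> dict:
    """Validation: execute the candidate in a child process and capture what happened.

    Assumed contract: a candidate prints one JSON object of metrics as its last stdout line.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(source)
        try:
            proc = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True, text=True, timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return {"ok": False, "error": "timeout"}
    if proc.returncode != 0:
        # The traceback is the feedback for a crash.
        return {"ok": False, "error": proc.stderr.strip()}
    try:
        metrics = json.loads(proc.stdout.strip().splitlines()[-1])
    except (IndexError, json.JSONDecodeError):
        metrics = None
    if not isinstance(metrics, dict):
        return {"ok": False, "error": "no metrics JSON object on the last stdout line"}
    return {"ok": True, "metrics": metrics}

def evolve(seed: str, llm: Callable[[str], str], target_sharpe: float, max_iters: int = 5):
    """Backtest-Observe-Recode: loop until the target is met or the budget runs out."""
    source, history = seed, []
    for _ in range(max_iters):
        result = run_candidate(source)                      # Validation
        history.append(result)
        if result["ok"] and result["metrics"].get("sharpe", float("-inf")) >= target_sharpe:
            break
        # Observation: crashes feed back the traceback, losses feed back the metrics.
        feedback = result["error"] if not result["ok"] else json.dumps(result["metrics"])
        prompt = f"Code:\n{source}\nResult:\n{feedback}\nDiagnose, then return revised code only."
        source = llm(prompt)                                # Recoding
    return source, history

# Hypothetical scripted "model": replays canned revisions so the loop runs offline.
revisions = iter([
    'import json\nprint(json.dumps({"sharpe": -0.4, "max_drawdown": 0.15}))\n',
    'import json\nprint(json.dumps({"sharpe": 1.3, "max_drawdown": 0.08}))\n',
])
seed = "print(undefined_name)\n"  # crashes on purpose to exercise the error path
final_source, history = evolve(seed, lambda prompt: next(revisions), target_sharpe=1.0)
```

In this run the seed crashes, the first revision loses money, and the second revision clears the target, so `history` records one entry per pass through the loop. The iteration cap matters in practice: without it, an agent that never reaches the target would spend API credits indefinitely.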

4. The Players: Approaches, Not Projects

This isn't just one algorithm; it's a landscape of architectural approaches. The academic literature highlights several key frameworks, each optimizing for a different variable.

| Framework | The Strategy | Best For |
|---|---|---|
| CogAlpha | The Specialist Hierarchy | Interpretability. Uses a rigid hierarchy of agents (Macro, Micro, Fundamental) and a "Judge" agent to prevent logical fallacies like look-ahead bias. |
| Alpha-GPT | The Human Collaborator | Human-in-the-Loop. Includes a "Thoughts Decompiler" that translates the complex evolved math back into English so you can understand what the AI built. |
| MetaGPT | The Software Factory | Robustness. Treats the strategy as a software engineering project. Assigns roles like "QA Engineer" to relentlessly test the code before it ever sees a backtest. |
| Chain-of-Alpha | The Explorer/Exploiter | Diversity. Uses distinct "Generation" chains (explore new ideas) and "Optimization" chains (refine existing ones) to prevent getting stuck in local optima. |

5. Implementation Reality: Where It Breaks

You are sold on the concept. Now, how do you actually build it?

The Tech Stack

You need a stack that supports high-speed iteration.

- The Engine: `Backtesting.py` is the current favorite for agents because it is lightweight and compact, which makes it easy for an LLM to read and understand. For scale, `VectorBT` is the choice for high-performance vectorization.
- The Brain: `LangChain` or `LangGraph` to manage the state of the conversation and memory.
- The Guardrails: A strict Sandbox (Docker) is non-negotiable. You are executing code generated by an AI; do not run it as root on your production server.
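As one way to picture the guardrail, the sketch below builds a `docker run` command that executes a single generated script with no network, a read-only filesystem, a non-root user, and resource caps. The function name, directory layout, limits, and image tag are assumptions for illustration; a real image would also need your backtesting dependencies installed.

```python
import shlex
from pathlib import Path

def docker_sandbox_argv(workdir: Path, script_name: str, image: str = "python:3.12-slim") -> list[str]:
    """Build a `docker run` command that executes one generated script with tight limits."""
    return [
        "docker", "run",
        "--rm",                    # delete the container when it exits
        "--network", "none",       # no network access
        "--read-only",             # root filesystem is read-only
        "--user", "1000:1000",     # do not run as root inside the container
        "--memory", "512m",        # cap RAM
        "--cpus", "1",             # cap CPU
        "--pids-limit", "64",      # cap process count
        "-v", f"{workdir.resolve()}:/work:ro",   # mount code and data read-only
        "-w", "/work",
        image,
        "python", script_name,
    ]

# Hypothetical candidate directory and script name.
argv = docker_sandbox_argv(Path("candidates/run_0001"), "strategy.py")
print(shlex.join(argv))
```

The list can be passed to `subprocess.run(argv, capture_output=True, text=True, timeout=60)` so that stdout, stderr, and a wall-clock limit are handled on the host side. Treat the specific limits as starting points to tune, not recommendations.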

The Risks

This technology is powerful, but it is not magic. It introduces specific, dangerous failure modes:

- The P-Hacking Machine: An agent optimized solely for "Maximize Sharpe" acts as a hyper-efficient data dredger. It will find the perfect curve fit for the noise in your data.
  Fix: You must penalize complexity (Regularization) and force strict out-of-sample validation periods.
- Hallucination: LLMs are not compilers. They will invent libraries that don't exist.
  Fix: Implementing the "Self-Correction" loop where Python tracebacks are fed back to the agent is mandatory.
- Alpha Decay: Markets change. A strategy evolved on 2020 data may fail in 2025.
  Fix: The system must be "Continuous." It is never "done." It is always observing recent data and evolving the codebase.
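The fix for the P-hacking risk can be expressed as a fitness function that scores candidates on out-of-sample Sharpe, subtracts a complexity penalty, and rejects candidates whose in-sample result is far better than their out-of-sample result. This is a sketch under assumptions: counting AST nodes is only one crude proxy for complexity, and `penalty_per_node` and `max_gap` are illustrative values, not calibrated ones.

```python
import ast
import math
from statistics import mean, stdev

def sharpe(returns, periods_per_year=252):
    """Annualized Sharpe ratio of per-period returns (risk-free rate assumed zero)."""
    if len(returns) < 2:
        return 0.0
    sd = stdev(returns)
    return 0.0 if sd == 0 else mean(returns) / sd * math.sqrt(periods_per_year)

def complexity(source: str) -> int:
    """Crude complexity proxy: number of AST nodes in the strategy source."""
    return sum(1 for _ in ast.walk(ast.parse(source)))

def fitness(in_sample, out_of_sample, source, penalty_per_node=0.002, max_gap=1.0):
    """Score a candidate on out-of-sample Sharpe, minus a complexity penalty.

    Candidates whose in-sample Sharpe exceeds out-of-sample by more than
    `max_gap` are rejected as likely curve fits.
    """
    s_in, s_out = sharpe(in_sample), sharpe(out_of_sample)
    if s_in - s_out > max_gap:
        return float("-inf")
    return s_out - penalty_per_node * complexity(source)

# Made-up return series and strategy source, for illustration only.
simple = "def signal(fast, slow):\n    return fast > slow\n"
steady = [0.001, -0.0005, 0.002, 0.0005] * 10
overfit_in = [0.010, 0.012] * 10
overfit_out = [-0.010, -0.012] * 10

score_ok = fitness(steady, steady, simple)
score_rejected = fitness(overfit_in, overfit_out, simple)
```

The out-of-sample series must be data the agent never saw while iterating. If the same holdout is reused across thousands of generations it gradually becomes in-sample, so the holdout window needs to be rotated or kept strictly for final acceptance.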

6. Strategic Implications: The Evolution of the Moat

What does this mean for your business?

Speed is the new Alpha. The days of a quarterly research cycle are over. The firm that can iterate through 10,000 structural strategy variations overnight has a mathematical advantage over the firm that iterates through 10.

The "So What":

- Shift Capital to Compute: Your budget for GPU/API credits should rival your budget for headcount.

- Hire Architects, Not Just Coders: You need staff who can design the agentic workflow, not just write the strategy logic.

- First-Mover Advantage: These systems learn. The longer they run, the more "Deprecated" strategies they archive, and the more refined their internal "World Model" of the market becomes. A feedback loop that has been running for a year is an asset you cannot simply buy.

The transition from human-written to machine-written strategy is not a matter of if, but when. The tools are here. The code is writing itself. The only question is: Is it writing your strategies, or your competitors'?